(Deep Learning) Face Mask Detection

앨런튜링_

2022. 3. 24. 08:26

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.patches as mpatches

import seaborn as sns

from collections import Counter

import os

import xmltodict

import torch

from torchvision import datasets,transforms,models

from torch.utils.data import Dataset,DataLoader

from PIL import Image

import sys

import torch.optim as optim

print("PyTorch Version: ", torch.__version__)

img_names = []
xml_names = []
for dirname, _, filenames in os.walk('Kaggle/input'):
    for filename in filenames:
        if os.path.join(dirname, filename)[-3:] != "xml":
            img_names.append(filename)
        else:
            xml_names.append(filename)

path_annotations = "Kaggle/input/annotations/"

listing = []
for img_name in img_names:
    with open(path_annotations + img_name[:-4] + ".xml") as fd:
        doc = xmltodict.parse(fd.read())
    # A single <object> is parsed as a dict, several <object> elements as a list.
    temp = doc["annotation"]["object"]
    if type(temp) == list:
        for obj in temp:
            listing.append(obj["name"])
    else:
        listing.append(temp["name"])

Items = Counter(listing).keys()
values = Counter(listing).values()
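`xmltodict` represents a single `<object>` element as a dict and repeated elements as a list, which is why the counting loop branches on the type. A minimal sketch of one way to fold both cases into a single code path follows. `as_list` and `count_labels` are hypothetical helper names, and the sample dicts are hand-written stand-ins for parsed annotations, not real dataset files.

```python
from collections import Counter

def as_list(obj):
    """Wrap a lone parsed <object> in a list; pass lists through unchanged."""
    return obj if isinstance(obj, list) else [obj]

def count_labels(parsed_docs):
    """Count class names across parsed annotation dicts."""
    counts = Counter()
    for doc in parsed_docs:
        for obj in as_list(doc["annotation"]["object"]):
            counts[obj["name"]] += 1
    return counts

# Hand-written stand-ins for xmltodict.parse(...) results (assumed shape).
sample_docs = [
    {"annotation": {"object": {"name": "with_mask"}}},
    {"annotation": {"object": [{"name": "with_mask"}, {"name": "without_mask"}]}},
]
label_counts = count_labels(sample_docs)
print(label_counts)
```

Normalizing to a list once removes the duplicated branch wherever annotations are read.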

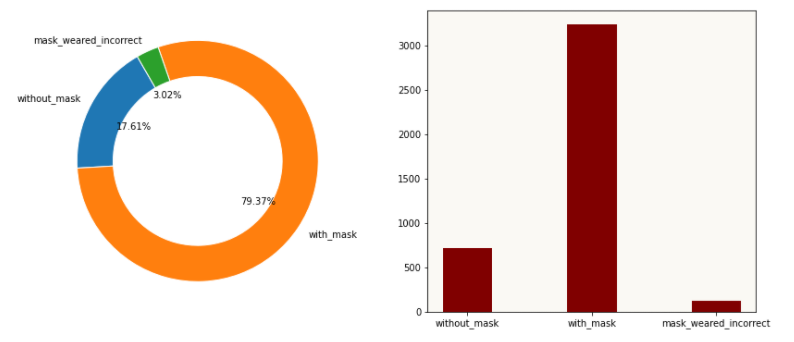

print(Items, '\n', values)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14,6))
background_color = '#faf9f4'
ax1.set_facecolor(background_color)
ax2.set_facecolor(background_color)
# Donut chart of the class shares on the left, absolute counts on the right.
ax1.pie(list(values), wedgeprops = dict(width=0.3, edgecolor='w'), labels=list(Items), radius=1, startangle=120, autopct='%1.2f%%')
ax2.bar(list(Items), list(values), color = 'maroon', width = 0.4)

path_image="Kaggle/input/images/"

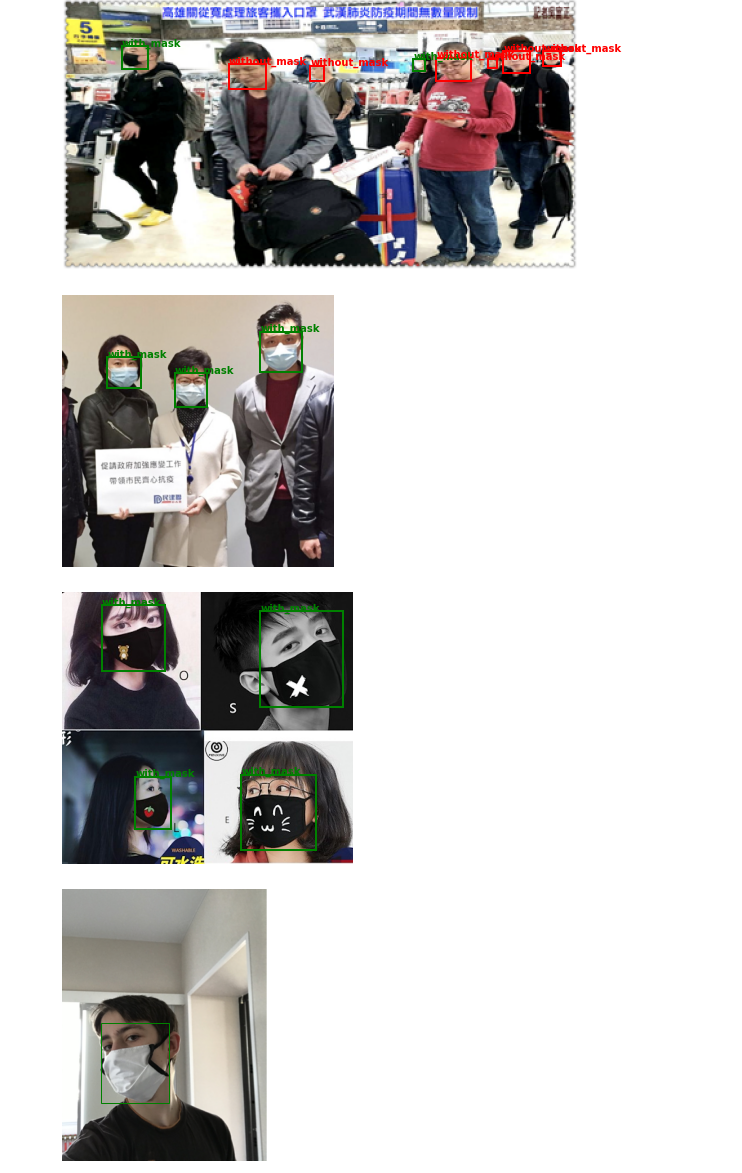

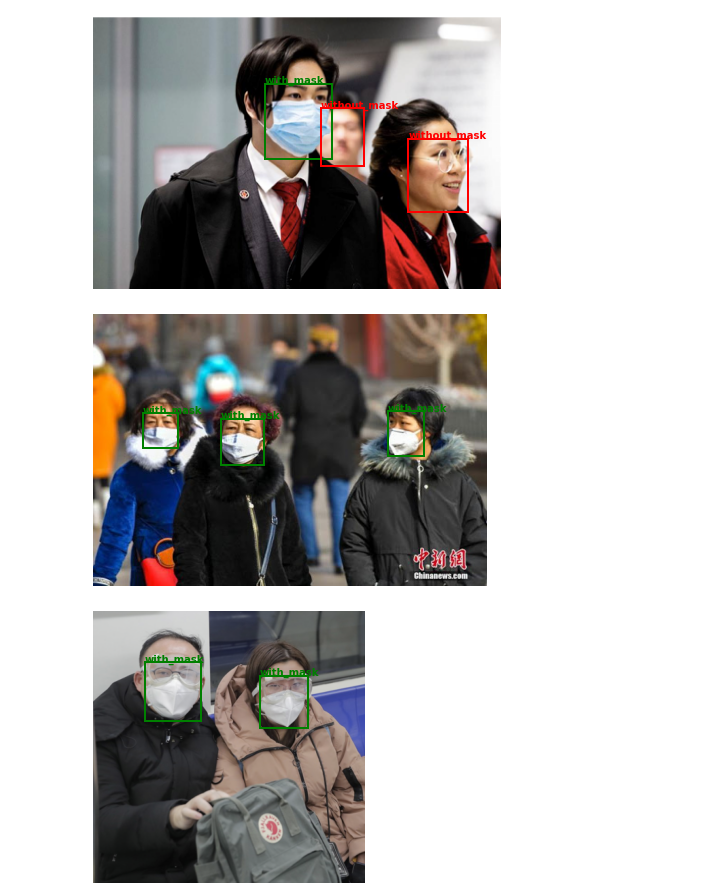

def face_cas(img):
    """Show an image with its annotated face boxes, colored by mask class."""
    with open(path_annotations + img[:-4] + ".xml") as fd:
        doc = xmltodict.parse(fd.read())
    image = plt.imread(os.path.join(path_image + img))
    fig, ax = plt.subplots(1)
    ax.axis("off")
    fig.set_size_inches(10, 5)
    ax.imshow(image)

    # A single <object> is parsed as a dict, several as a list.
    temp = doc["annotation"]["object"]
    if type(temp) != list:
        temp = [temp]

    # class name -> (box edge color, label text color)
    styles = {"with_mask": ("g", "green"),
              "without_mask": ("r", "red"),
              "mask_weared_incorrect": ("y", "yellow")}
    for obj in temp:
        # bndbox values are ordered xmin, ymin, xmax, ymax.
        xmin, ymin, xmax, ymax = list(map(int, obj["bndbox"].values()))
        edge, text = styles[obj["name"]]
        mpatch = mpatches.Rectangle((xmin, ymin), xmax - xmin, ymax - ymin, linewidth=2, edgecolor=edge, facecolor="none")
        ax.add_patch(mpatch)
        rx, ry = mpatch.get_xy()
        ax.annotate(obj["name"], (rx, ry), color=text, weight='bold', fontsize=10, ha='left', va='baseline')

fun_images = img_names.copy()
for i in range(1,8):
    face_cas(fun_images[i])

options={"with_mask":0,"without_mask":1,"mask_weared_incorrect":2}

def dataset_creation(image_list):
    """Crop every annotated face and pair the transformed crop with its class index."""
    image_tensor = []
    label_tensor = []
    for j in image_list:
        with open(path_annotations + j[:-4] + ".xml") as fd:
            doc = xmltodict.parse(fd.read())
        # A single <object> is parsed as a dict, several as a list.
        temp = doc["annotation"]["object"]
        if type(temp) != list:
            temp = [temp]
        source = Image.open(path_image + j).convert("RGB")
        for obj in temp:
            # bndbox values are ordered xmin, ymin, xmax, ymax.
            xmin, ymin, xmax, ymax = list(map(int, obj["bndbox"].values()))
            label = options[obj["name"]]
            # crop(img, top, left, height, width)
            image = transforms.functional.crop(source, ymin, xmin, ymax - ymin, xmax - xmin)
            image_tensor.append(my_transform(image))
            label_tensor.append(torch.tensor(label))
    final_dataset = [[k, l] for k, l in zip(image_tensor, label_tensor)]
    return tuple(final_dataset)
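The crop call in `dataset_creation` takes its arguments in the order top, left, height, width, while the annotation stores corner coordinates (xmin, ymin, xmax, ymax). A small sketch of that conversion is below. `bbox_to_crop_args` is a hypothetical helper name, and the sample coordinates are made up for illustration rather than taken from the dataset.

```python
def bbox_to_crop_args(xmin, ymin, xmax, ymax):
    """Convert corner coordinates to (top, left, height, width)."""
    if xmax <= xmin or ymax <= ymin:
        raise ValueError("bounding box must have positive width and height")
    return ymin, xmin, ymax - ymin, xmax - xmin

# Illustrative box only; not a real annotation.
crop_args = bbox_to_crop_args(79, 105, 109, 142)
print(crop_args)  # (105, 79, 37, 30)
```

Keeping the conversion in one place makes it harder to swap x and y by accident, and the check rejects degenerate boxes before they reach the transform.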

my_transform = transforms.Compose([transforms.Resize((226,226)),
                                   transforms.ToTensor()])

mydataset = dataset_creation(img_names)

train_size = int(len(mydataset)*0.7)
test_size = len(mydataset) - train_size
print('Length of dataset is', len(mydataset), '\nLength of training set is :', train_size, '\nLength of test set is :', test_size)

trainset, testset = torch.utils.data.random_split(mydataset, [train_size, test_size])

train_dataloader = DataLoader(dataset = trainset, batch_size = 32, shuffle = True, num_workers = 4)

test_dataloader = DataLoader(dataset = testset, batch_size = 32, shuffle = True, num_workers = 4)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

import warnings

warnings.filterwarnings("ignore", category=UserWarning)

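# Pull one batch from the loader to preview a few cropped faces.
train_features, train_labels = next(iter(train_dataloader))
train_features_np = train_features.numpy()
fig = plt.figure(figsize=(25,4))
for idx in np.arange(3):
    ax = fig.add_subplot(2, int(20/2), idx+1, xticks=[], yticks=[])
    # Tensors are channel-first (C, H, W); imshow expects (H, W, C).
    plt.imshow(np.transpose(train_features_np[idx], (1,2,0)))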

model=models.resnet34(pretrained=True)

for param in model.parameters():
    param.requires_grad = False

import torch.nn as nn

n_inputs = model.fc.in_features
last_layer = nn.Linear(n_inputs, 3)
# Replace the 1000-class ImageNet head with a 3-class classifier.
model.fc = last_layer
print('reinitialize model with output features as 3 : ', model.fc.out_features)

features_resnet34 = []
for key, value in model._modules.items():
    features_resnet34.append(value)
features_resnet34

# Weights of a 3x3 convolution with 64 input and 128 output channels (no bias term).
conv_param = 64 * 128 * 3 * 3
print('Number of parameters for Conv2d is:', conv_param)
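The product above is the weight count of one convolution: input channels times output channels times kernel height times kernel width. A short sketch of that formula as a function is below. `conv2d_param_count` is a hypothetical helper, and it assumes a square kernel and ungrouped convolution.

```python
def conv2d_param_count(in_channels, out_channels, kernel_size, bias=False):
    """Parameter count of a square-kernel, ungrouped 2D convolution."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    # One bias value per output channel, if the layer has a bias.
    return weights + (out_channels if bias else 0)

no_bias = conv2d_param_count(64, 128, 3)
with_bias = conv2d_param_count(64, 128, 3, bias=True)
print(no_bias)    # 73728
print(with_bias)  # 73856
```

The bias-free figure matches `64 * 128 * 3 * 3`; enabling the bias adds one parameter per output channel.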

criterion=nn.CrossEntropyLoss()

optimizer=optim.SGD(model.parameters(),lr=0.001,momentum=0.9)

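# Make every parameter trainable again, then freeze the first six child
# modules (conv1 through layer2) so that layer3, layer4, and fc are fine-tuned.
for param in model.parameters():
    param.requires_grad = True

ct = 0
for child in model.children():
    ct += 1
    if ct < 7:
        for param in child.parameters():
            param.requires_grad = False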

for epoch in range(1,2):
    running_loss = 0.0
    train_losses = []
    for i, (inputs, labels) in enumerate(train_dataloader):
        #inputs = inputs.to(device)
        #labels = labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
        if i % 20 == 19:
            print("Epoch {}, batch {}, training loss {}".format(epoch, i+1, running_loss/20))
            running_loss = 0.0

print('\nFinished Training')
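The post builds `test_dataloader` but stops after training. One possible way to score a model on held-out data is sketched below. `evaluate_accuracy` is a hypothetical helper, not part of the original notebook, and the toy linear model and one-hot inputs are stand-ins so the sketch runs on its own.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate_accuracy(model, dataloader, device=torch.device("cpu")):
    """Return the fraction of samples whose arg-max prediction matches the label."""
    model.eval()  # use inference behaviour for BatchNorm and Dropout
    correct, total = 0, 0
    with torch.no_grad():  # gradients are not needed for evaluation
        for inputs, labels in dataloader:
            inputs, labels = inputs.to(device), labels.to(device)
            predictions = model(inputs).argmax(dim=1)
            correct += (predictions == labels).sum().item()
            total += labels.size(0)
    return correct / total if total else 0.0

# Stand-in model: an identity-weight linear layer over one-hot inputs,
# so the predicted class for row i is i.
toy_model = nn.Linear(3, 3, bias=False)
with torch.no_grad():
    toy_model.weight.copy_(torch.eye(3))
toy_inputs = torch.eye(3)
toy_labels = torch.tensor([0, 1, 0])  # the last label is deliberately wrong
toy_loader = DataLoader(TensorDataset(toy_inputs, toy_labels), batch_size=2)
toy_accuracy = evaluate_accuracy(toy_model, toy_loader)
print(toy_accuracy)
```

With the notebook's objects this would be called as `evaluate_accuracy(model, test_dataloader)`, assuming the model and the batches live on the same device.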