
(NLP) Prompt Learning Presentation (2022-04-27)

앨런튜링_

2022. 4. 27. 13:28

- Attention Is All You Need

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

- Improving Language Understanding by Generative Pre-Training

- Language Models are Unsupervised Multitask Learners

  - https://d4mucfpksywv.cloudfront.net/better-language-models/language_models_are_unsupervised_multitask_learners.pdf

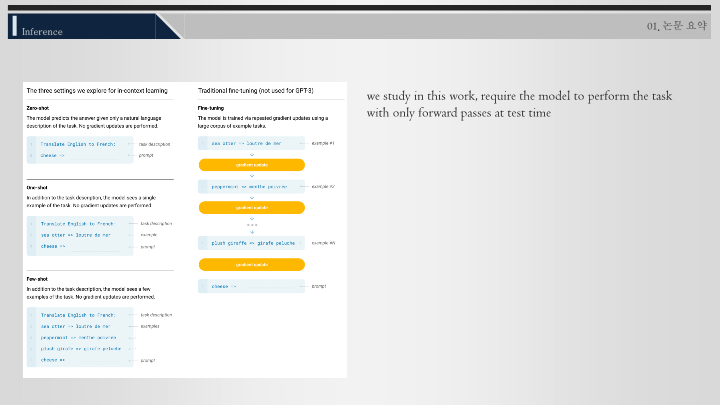

- Language Models are Few-Shot Learners

- Exploiting Cloze Questions for Few Shot Text Classification and Natural Language Inference

- It's Not Just Size That Matters: Small Language Models Are Also Few-Shot Learners

- GPT Understands, Too