- Paper list

- Attention Is All You Need

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

- Improving Language Understanding by Generative Pre-Training

- Language Models are Unsupervised Multitask Learners

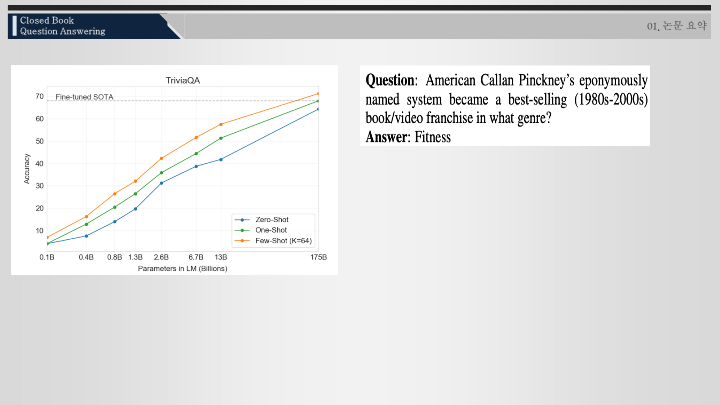

- Language Models are Few-Shot Learners

- Exploiting Cloze Questions for Few Shot Text Classification and Natural Language Inference

- It's Not Just Size That Matters: Small Language Models Are Also Few-Shot Learners

- AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts

- GPT Understands, Too

GitHub - THUDM/P-tuning: A novel method to tune language models. Codes and datasets for paper "GPT Understands, Too".

